Introduction

I have an Unraid server running some pretty basic hardware:

- i5-3570K with a P8Z77-V LX

- 16GB DDR3

- Drives:

  - Parity drive: 4TB Seagate IronWolf HDD

  - Cache drive: Kingston 480GB A400 SSD

  - Total Capacity: 16TB (5 array disks)

  - 2TB download cache (HDD)

The server runs more than 30 Docker containers and can support multiple simultaneous Plex transcodes. It hosts a few websites, some gaming servers, file sharing, LLM proxies and chat clients, automated backups and more.

On a regular day, the server is barely busy, but it can handle large workloads like transcoding and moving large video files with ease. It’s taken me a few years to dial it in to this point, but I’ve finally got it running smoothly, so I figured it was time to summarize some of the methods I used to boost performance and reliability at home!

General

Performance Tips

General performance tips for Unraid:

- Don’t **** with Unraid’s Network

- Reduce I/O traffic on your appdata cache disk as much as possible

- Distribute workloads across multiple disks

- Prioritize containers with resource limits

- Understand and utilize your server’s Schedule

Use External Networking Resources

If you host Pi-hole or any other network infrastructure that Unraid relies on (DNS, for example), don’t run it on Unraid, especially not in Docker. Otherwise, whenever Docker or the array is stopped, Unraid loses services it needs to function. Basically, Unraid shouldn’t host things it depends on.
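A quick way to check for this kind of self-dependency is to compare the DNS servers Unraid uses against the server’s own addresses. This is a minimal sketch that assumes Unraid’s resolvers are listed in /etc/resolv.conf and that the ip command is available; check_self_dns is a hypothetical helper, not an Unraid tool.

```shell
# Hypothetical helper: warn if any nameserver in a resolv.conf-style file
# points back at this host (e.g. a Pi-hole container running on Unraid).
# Usage: check_self_dns [resolv_conf] [local_ip ...]
# Returns 0 when no self-referencing nameserver is found, 1 otherwise.
check_self_dns() {
  local conf="${1:-/etc/resolv.conf}"
  [ "$#" -gt 0 ] && shift
  local ips="$*"
  if [ -z "$ips" ]; then
    # No addresses given: collect this host's IPv4 addresses.
    ips=$(ip -4 -o addr show | awk '{ split($4, a, "/"); print a[1] }')
  fi
  local found=0 ns ip
  for ns in $(awk '$1 == "nameserver" { print $2 }' "$conf"); do
    case "$ns" in
      127.*)
        echo "WARNING: nameserver $ns is a loopback address"
        found=1
        continue
        ;;
    esac
    for ip in $ips; do
      if [ "$ns" = "$ip" ]; then
        echo "WARNING: nameserver $ns is one of this host's addresses"
        found=1
      fi
    done
  done
  return "$found"
}
```

Run it with no arguments on the server; any warning means Unraid is resolving names through something it hosts itself.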

Monitor your Server

However you do it, you should install some sort of monitoring beyond what comes out of the box with Unraid.

I suggest using Grafana and Prometheus. These are fine to run on Unraid, but if the server fails completely, your monitoring goes down with it and you’ll have limited metrics about what happened.

Measuring the impact of your changes is key to improving your system performance, and good metrics make that easy (and sometimes show you things you weren’t looking for!)
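If you want a quick number before building dashboards, the share of CPU time spent waiting on disk I/O (iowait) is a useful metric to track when you change your disk layout. This is a minimal sketch that samples the aggregate cpu line of /proc/stat twice; the function names are hypothetical, and it assumes the standard Linux field order (user, nice, system, idle, iowait, irq, softirq, steal).

```shell
# Print "<iowait> <total>" jiffies from the aggregate "cpu" line of a
# /proc/stat-format file. Fields 2-9 are user, nice, system, idle, iowait,
# irq, softirq and steal; guest time is already included in user.
read_cpu() {
  awk '$1 == "cpu" { t = 0; for (i = 2; i <= 9; i++) t += $i; print $6, t }' "${1:-/proc/stat}"
}

# Percentage of CPU time spent in iowait between two samples.
# Usage: iowait_pct_from IOWAIT1 TOTAL1 IOWAIT2 TOTAL2
iowait_pct_from() {
  awk -v dw="$(( $3 - $1 ))" -v dt="$(( $4 - $2 ))" \
    'BEGIN { if (dt > 0) printf "%.1f\n", 100 * dw / dt; else print "0.0" }'
}

# Sample /proc/stat twice, INTERVAL seconds apart (default 5).
iowait_pct() {
  local first second
  first=$(read_cpu)
  sleep "${1:-5}"
  second=$(read_cpu)
  # Intentionally unquoted: each "iowait total" pair splits into two arguments.
  iowait_pct_from $first $second
}
```

For example, run iowait_pct 10 while the mover or a big download is running, before and after a change, and compare the results.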

Disk Management

So you have a pile of 3.5” HDDs and a couple of SSDs. You want to use them all, but you don’t want to waste space or performance. Unraid has a lot of options for managing your disks, and it can be a bit overwhelming at first. Here are some tips to help you get started or optimize your existing setup.

Use your fastest SSDs for your Cache

Even a 250GB SSD is sufficient if you’re only storing appdata and other system resources on it.

There are 2 major constraints to consider when selecting your parity drive:

- Speed: The array is only as fast as the slowest disk involved, and every write also updates parity, so the parity drive should be at least as fast as your fastest data disk.

- Size: No data drive in the array can be larger than your parity drive.

Therefore, I suggest you use the largest, fastest HDD you have for your parity drive. An SSD won’t make the array faster, since every write still waits on an HDD data disk, and the constant parity updates will wear the SSD out faster.

If you are going to invest in any new hardware, I’d suggest you invest in a Seagate IronWolf HDD at a suitable capacity.

Create Pools for Different Workloads

Pools can help distribute the workload of a server across multiple disks, reducing time spent in iowait.

Any data you don’t need on the array should be on a pool. For example:

- A “Downloads” pool can store torrents and other downloads. This prevents write traffic from affecting the cache or the array.

- A “VMs” pool can store VM data or other large disk images that may have their own redundancy or may not require it.

You can use the mover, rsync, or the Dynamix File Manager plugin to move data between your pools and the array as required.
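For one-off moves with rsync, a pattern like the one below copies everything and deletes each source file only after it has transferred successfully. It’s a sketch: move_to_array is a hypothetical helper, and the example paths are placeholders for your own pool and share names.

```shell
# Hypothetical helper: move the contents of SRC into DEST with rsync.
# --remove-source-files deletes each source file only after it has been
# transferred; find then removes the directories left empty behind it.
move_to_array() {
  local src="$1" dest="$2"
  mkdir -p "$dest" &&
    rsync -a --remove-source-files "$src/" "$dest/" &&
    find "$src" -mindepth 1 -type d -empty -delete
}

# Example (placeholder paths):
#   move_to_array /mnt/downloads/complete /mnt/user/media
```

Writing to a /mnt/user/... destination lets the share’s settings decide which array disk receives the data.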

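Enable Reconstruct Write

Also known as Turbo Write, reconstruct write lets Unraid compute parity by reading all the other data disks, instead of first reading the old data and parity from the target and parity disks. This can improve write speeds, but it requires every disk to be spun up and comes with other limitations and drawbacks. Read more here.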

I don’t use this feature, but if you have a lot of data to write to the array, it may be worth considering.
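The trade-off is easiest to see in the parity arithmetic. Assuming single parity, where the parity drive P holds the bitwise XOR (⊕) of all n data disks, the new parity P′ after disk i changes from D_i to D_i′ can be computed either way:

```latex
\begin{aligned}
P  &= D_1 \oplus D_2 \oplus \cdots \oplus D_n && \text{(single parity)} \\
P' &= P \oplus D_i \oplus D_i' && \text{(read/modify/write: read } D_i \text{ and } P \text{ first)} \\
P' &= D_i' \oplus \textstyle\bigoplus_{j \ne i} D_j && \text{(reconstruct write: read the other data disks)}
\end{aligned}
```

Both give the same result, since substituting the first line into the second cancels D_i. Read/modify/write only touches two disks, but each must read and then rewrite the same sectors, which costs roughly a platter rotation; reconstruct write keeps every disk busy but lets the target and parity disks write straight away.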

Docker

Configure Resource Limits

Docker comes with built-in support for limiting the resources available to each container.

To limit the CPU and memory available to a container, you can use the --cpus and --memory flags when creating it. In the Unraid UI, edit the container, switch to “Advanced View” and add these flags under “Extra Parameters”. For example:

# Limit to 1 CPU and 1GB of memory

--cpus=1 --memory=1G

In my case, since I don’t have swap enabled, I got this warning:

Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.

I was able to resolve this by adding the flag --memory-swap=-1, which tells Docker not to put a limit on swap usage. Note that this may not be the right setting for your use case. See the docs here to determine the right value based on your needs.

With 16GB of RAM, I allocated a maximum of:

- 4GB for Plex

- 1GB for several more complex apps that have their own database, UI, etc.

- 500MB for small services like proxies, dynamic DNS, etc.
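Because these limits are caps rather than reservations, nothing stops them from adding up to more than your physical RAM. As a sanity check, this sketch totals a list of Docker-style limits against your installed memory; to_mib and memory_budget are hypothetical helpers, and the container counts in the example are made up.

```shell
# Hypothetical helper: convert a Docker-style memory value (4g, 500m, 512k,
# or plain bytes) to MiB. Whole numbers only; suffixes are case-insensitive.
to_mib() {
  local v num
  v=$(printf '%s' "$1" | tr 'A-Z' 'a-z')
  num=${v%[bkmg]}
  case "$v" in
    *g) echo $(( num * 1024 )) ;;
    *m) echo "$num" ;;
    *k) echo $(( num / 1024 )) ;;
    *b) echo $(( num / 1048576 )) ;;
    *)  echo $(( v / 1048576 )) ;;
  esac
}

# Usage: memory_budget TOTAL LIMIT...
# Prints the total and returns non-zero if the limits exceed TOTAL.
memory_budget() {
  local total sum=0 limit
  total=$(to_mib "$1")
  shift
  for limit in "$@"; do
    sum=$(( sum + $(to_mib "$limit") ))
  done
  echo "allocated ${sum}MiB of ${total}MiB"
  [ "$sum" -le "$total" ]
}
```

For example, Plex plus three 1GB apps and three 500MB services on a 16GB server: memory_budget 16g 4g 1g 1g 1g 500m 500m 500m prints "allocated 8668MiB of 16384MiB". Docker treats these suffixes as binary units, so 500m is 500MiB.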

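For a few containers that I want to keep really low priority, I also set --cpus=1. This caps the container at one core’s worth of CPU time, spread across whichever cores it’s allowed to use. Unraid’s CPU pinning (which sets --cpuset-cpus) controls which cores those are, so the two combine: a container pinned to two cores with --cpus=1 can run on both, but uses no more than one core’s worth of time in total.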
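To confirm which limits are actually configured, you can read them back with docker inspect. Docker stores --cpus as HostConfig.NanoCpus (CPUs × 10^9) and --memory as HostConfig.Memory in bytes, with 0 meaning no limit. The helper names below are hypothetical.

```shell
# Format Docker's stored limits: NanoCpus is CPUs x 1e9, Memory is bytes,
# and 0 means no limit was set.
fmt_limits() {
  awk -v n="$1" -v m="$2" 'BEGIN {
    cpus = (n == 0) ? "unlimited" : sprintf("%.2f", n / 1e9)
    mem  = (m == 0) ? "unlimited" : sprintf("%dMiB", m / 1048576)
    print "cpus=" cpus " memory=" mem
  }'
}

# Print the configured limits of every running container (requires Docker).
show_limits() {
  docker ps -q |
    xargs -r docker inspect --format '{{.Name}} {{.HostConfig.NanoCpus}} {{.HostConfig.Memory}}' |
    while read -r name nano mem; do
      echo "${name#/} $(fmt_limits "$nano" "$mem")"
    done
}
```

A container created with --cpus=1 --memory=1G shows up as "cpus=1.00 memory=1024MiB".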

To watch each container’s live CPU and memory usage against its memory limit, you can run the following command:

docker container stats

Appdata

Cache Dat App

In Unraid, each Docker container you create has an appdata folder (typically stored at /mnt/user/appdata). This contains configuration files, logs, and other assets specific to each container. You can think of this as a “home folder” for each container. As such, the containers rely heavily on the data inside this folder. That usually means we can expect a high number of reads and writes to this folder.

Caching the appdata folder is critical for the performance of your server. Put another way, storing live appdata on the array is bad: every one of those small writes would also have to update the parity drive. For “parity”, we should instead back up appdata to our array using a scheduled rsync or the Appdata Backup plugin.
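If you go the rsync route, a minimal sketch looks like this. It mirrors appdata to a folder on the array; the destination share name is an assumption, and unlike the Appdata Backup plugin it does not stop containers, so stop anything running a database first to avoid copying it mid-write.

```shell
# Hypothetical helper: mirror appdata to the array with rsync.
# SRC defaults to the cache copy; DEST is an assumed share name.
# Stop containers that use databases first: copying a live database
# can capture it in an inconsistent state.
backup_appdata() {
  local src="${1:-/mnt/cache/appdata}"
  local dest="${2:-/mnt/user/backups/appdata}"
  mkdir -p "$dest" &&
    rsync -a --delete "$src/" "$dest/"
}
```

Because of --delete, this keeps a single mirror rather than a history: files removed from appdata are removed from the backup on the next run.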

First, make sure your appdata folder only uses the cache:

- Stop the Docker service in Unraid (Settings > Docker > Enable Docker: No)

- Ensure appdata is on the cache. Go to Shares > appdata and configure it with the following settings:

  - Primary Storage: Cache

  - Secondary Storage: Array

  - Mover action: Array -> Cache

- Run the mover to move the appdata folder to the cache drive

- Go back to Shares > appdata and configure it with the following settings:

  - Primary Storage: Cache

  - Secondary Storage: None

- Re-enable the Docker service (Settings > Docker > Enable Docker: Yes)

This is a good start, but writing to /mnt/user/appdata still goes through Unraid’s user share layer (the shfs FUSE filesystem behind /mnt/user), which adds some overhead. Since appdata now lives entirely on the cache, we can point our containers at the cache directly instead.

Next, we can update each Docker container’s appdata folder mapping to point to /mnt/cache/appdata/<container-name> instead of /mnt/user/appdata/<container-name>. This will bypass the user share and write directly to the cache drive.
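To find the containers you still need to update, you can search the saved container templates for the old path. This sketch assumes Unraid keeps user templates as XML files in /boot/config/plugins/dockerMan/templates-user, which I believe is the default; it only reports matches, and the change itself should still be made by editing each container in the UI.

```shell
# Hypothetical helper: print the names of container templates that still
# map appdata through the user share (/mnt/user/appdata). Read-only.
find_user_share_appdata() {
  local dir="${1:-/boot/config/plugins/dockerMan/templates-user}" f
  for f in "$dir"/*.xml; do
    [ -f "$f" ] || continue
    if grep -q '/mnt/user/appdata' "$f"; then
      basename "$f"
    fi
  done
  return 0
}
```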

Write Logs to RAM

A large share of the writes to the appdata folder are logs. These logs are typically not needed after a restart (especially if you’re also shipping them elsewhere, such as Loki), so we can safely write them to RAM instead of the cache.

To do this, we can use a tmpfs: a temporary filesystem held in RAM whose contents are deleted when the server restarts. Writes to it are much faster than writes to disk, and moving logs there significantly reduces write traffic on your cache.
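Docker can also give a single container its own RAM-backed mount with the --tmpfs flag, added under “Extra Parameters” like the resource limits. This differs from the host /var/log setup described below: the tmpfs belongs to the container and is emptied whenever the container stops, not just on reboot. The container path here is a placeholder for wherever your app writes its logs.

```shell
# Extra Parameters: mount a 64MB tmpfs over the app's log directory.
# /config/logs is a placeholder; use the path your container actually logs to.
--tmpfs /config/logs:rw,size=64m
```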

There are two types of logs we need to consider:

Container logs

These are the logs that Docker creates for each container. They are typically stored in /var/lib/docker/containers/<container-id>/<container-id>-json.log, which is often on our cache.

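Docker doesn’t provide a per-container option for where these files are stored. On Unraid, /var/lib/docker is where the Docker vDisk image (or directory) is mounted, and that normally lives in the system share on your cache, so these writes land on the SSD. However, we can switch to the local logging driver, which stores these logs in a more efficient, compressed format with automatic rotation.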

To do this, you can either:

- Add the --log-driver flag to your container when creating it (in Unraid, under “Extra Parameters”) (docs):

--log-driver=local

or:

- Globally configure Docker to use the local logging driver for all containers. This is done by adding the following to your daemon.json file (typically located at /etc/docker/daemon.json):

{ "log-driver": "local" }

In Unraid, this won’t persist past a reboot. To fix this, you can add the following to your go file in /boot/config/go:

# Docker Configuration
mkdir -p /etc/docker
tee /etc/docker/daemon.json <<EOF
{ "log-driver": "local" }
EOF

The global default only applies to containers created after Docker restarts; existing containers keep their current driver until they’re recreated (editing and applying a container in Unraid recreates it).
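To confirm the change took effect, this sketch prints the daemon’s default driver and lists any containers still using something other than local. The helper names are hypothetical; the docker info and docker inspect template fields are standard.

```shell
# Print names of containers whose log driver isn't "local".
# Input: "<name> <driver>" lines on stdin, as produced by docker inspect.
filter_non_local() {
  awk '$2 != "local" { sub(/^\//, "", $1); print $1 }'
}

# Show the daemon default, then list containers still on another driver.
check_log_drivers() {
  echo "daemon default: $(docker info --format '{{.LoggingDriver}}')"
  docker ps -aq |
    xargs -r docker inspect --format '{{.Name}} {{.HostConfig.LogConfig.Type}}' |
    filter_non_local
}
```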

Application logs

These are the logs that the application inside the container creates. Not every container creates these, and if they don’t write logs to the appdata folder, we’d never see them. However, many applications write their own log files to their appdata folder, and these are our target.
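For an overview before going container by container, a sketch like this lists *.log files and logs folders under appdata, largest first. The function name is hypothetical, and it only finds logs that follow those naming conventions.

```shell
# List "logs"/"Logs" directories and *.log files under ROOT with their size
# in KiB, largest first. Log directories are reported as a whole (pruned),
# so the files inside them aren't listed separately. Read-only.
find_app_logs() {
  local root="${1:-/mnt/cache/appdata}"
  find "$root" \( -type d \( -name logs -o -name Logs \) -prune -print \) \
    -o \( -type f -name '*.log' -print \) |
    while IFS= read -r path; do
      du -sk "$path"
    done |
    sort -rn
}
```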

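To start, make sure there’s room in RAM for the logs. On Unraid, /var/log is already a tmpfs, but a small one, so I enlarged it for all Unraid logs with:

mount -o remount,size=4G /var/log

Keep in mind that this is in RAM, which means it must be kept small. The size is only a cap (a tmpfs uses memory only for the files actually stored in it), but a runaway log can still fill it. Review your applications to ensure they have proper log rotation and cleanup.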

You can configure this to run automatically on boot by adding this command to the go file in /boot/config/go or using the User Scripts plugin.

Then, for each container:

-

Check its

appdatafolder for log files:ls /mnt/user/appdata/<container-name>/*.log -

Remap any log files here to point to a folder under

/var/log-

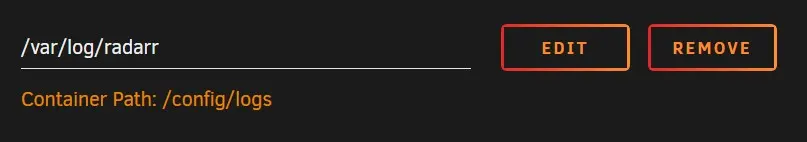

If there’s a

logsfolder, simply map it to/var/log/<container-name>

-

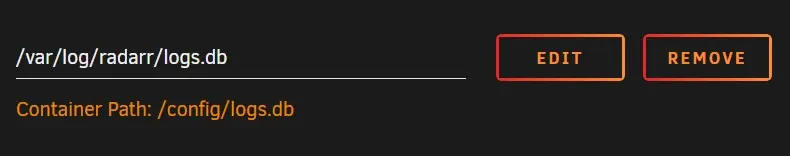

If there’s a

logsfile:-

first run

touch /var/log/<container-name>.logon the host (otherwise Docker will assume you’re mapping a folder) -

Then map it to

/var/log/<container-name>.log

-v /var/log/logfile.log:/var/log/logfile.log -

Because /var/log is cleared on every reboot, this file also needs to be recreated at boot. Simply add this to whatever script you use to resize /var/log:

touch /var/log/<container-name>.log
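Putting these steps together, a boot script (in the go file or a User Scripts script that runs at startup) might look like the sketch below. The entries are placeholders, and prepare_log_targets is a hypothetical helper: entries ending in / are created as folders, anything else as an empty file.

```shell
# Where the host-side log targets live (Unraid's RAM-backed /var/log).
LOG_ROOT="${LOG_ROOT:-/var/log}"

# Recreate log targets after each boot, since anything created under
# /var/log is lost on reboot. Entries ending in "/" become directories;
# anything else becomes an empty file, so Docker bind-mounts it as a file
# instead of creating a directory in its place.
prepare_log_targets() {
  local entry
  for entry in "$@"; do
    case "$entry" in
      */) mkdir -p "$LOG_ROOT/$entry" ;;
      *) mkdir -p "$(dirname "$LOG_ROOT/$entry")" && touch "$LOG_ROOT/$entry" ;;
    esac
  done
}

# Example boot sequence (placeholder container names):
#   mount -o remount,size=4G /var/log
#   prepare_log_targets sonarr/ radarr/ someapp.log
```

Run it before the Docker service starts the containers, so every bind-mount source already exists with the right type.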


Scheduling

A lot of issues with Unraid happen at seemingly random times. In most cases, this is probably due to some system running a scheduled task.

It’s important to understand how your server is scheduled. Do processes with heavy disk I/O run at the same time? Are backups scheduled at the same time as other tasks? All of these can contribute to issues with performance and reliability.

To address this, I wrote my own schedule in a note and then applied the configurations to the server. This helped me visualize the server’s schedule and identify any potential conflicts.
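To start that note, it helps to dump what’s already scheduled. As far as I know, Unraid collects its built-in schedules (mover, parity check, TRIM and plugin tasks) into /etc/cron.d/root, so this sketch reads that by default; treat the path as an assumption and pass another crontab-format file if yours differs. show_schedule is a hypothetical helper.

```shell
# Print crontab entries sorted by hour, then minute, skipping comments and
# variable assignments. "@reboot"/"@daily" style lines are printed as-is.
# Fields like "*" or "*/5" sort as 0, so the order is approximate.
show_schedule() {
  [ "$#" -eq 0 ] && set -- /etc/cron.d/root
  grep -hv '^[[:space:]]*#' "$@" |
    awk '$1 ~ /^@/ { print; next }
         NF >= 6 && $1 !~ /=/ {
           cmd = $6
           for (i = 7; i <= NF; i++) cmd = cmd " " $i
           printf "%-6s %-6s %-4s %-4s %-4s %s\n", $1, $2, $3, $4, $5, cmd
         }' |
    sort -k2,2n -k1,1n
}
```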

Here are some examples of things to consider:

- When does your parity check run? How long does it take to complete and is it cumulative?

- When does the SSD trim run?

- When does the mover run?

- Do you have Appdata Backup installed? You should, and it should be scheduled!

- You may want to update your torrent client to use different up/down limits during certain time windows

- If you host a Plex or Jellyfin server, consider what hours people are likely to use it

- You can utilize your server’s capacity off peak to do things like transcode your media library with Tdarr

—

I hope a few of these tips will help you get more out of your Unraid server!